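10.0、Prerequisites

10.1、Collecting news from Sina and storing it in MySQL

10.2、Syncing MySQL data to Elasticsearch

10.3、Searching Elasticsearch and returning results to the front end

10.4、ES news search in practice: the query page

10.5、ES news search in practice: displaying results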

----------

Project repository: https://gitee.com/carloz/elastic-learn.git

Code for this article: https://gitee.com/carloz/elastic-learn/tree/master/elasticsearch-news

----------

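10.0、Prerequisites

An ELK stack (Elasticsearch, Logstash, Kibana) is already deployed.

10.1、Collecting news from Sina and storing it in MySQL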

http://finance.sina.com.cn/7x24/

`# Sina news collection script`

Python data collection script:

`#!/usr/bin/python`
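As a rough sketch of the storage step only, the snippet below inserts feed items into a table and skips ids that were already saved, so the collector can be re-run safely. Everything here is an assumption made for illustration: the table layout (`id`, `create_time`, `content`), the shape of a feed item, and the helper names `open_store` and `save_items`. To stay self-contained it uses the standard-library `sqlite3` module as a stand-in for MySQL.

```python
import sqlite3

# Hypothetical table layout; the article does not show the real schema.
SCHEMA = """
CREATE TABLE IF NOT EXISTS sina_news (
    id INTEGER PRIMARY KEY,
    create_time TEXT NOT NULL,
    content TEXT NOT NULL
)
"""


def open_store(path=":memory:"):
    """Open the stand-in database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_items(conn, items):
    """Insert feed items, skipping ids already stored; return rows added."""
    count_sql = "SELECT COUNT(*) FROM sina_news"
    before = conn.execute(count_sql).fetchone()[0]
    conn.executemany(
        # SQLite syntax. On MySQL the closest equivalent is INSERT IGNORE INTO.
        "INSERT OR IGNORE INTO sina_news (id, create_time, content) "
        "VALUES (?, ?, ?)",
        [(it["id"], it["create_time"], it["content"]) for it in items],
    )
    conn.commit()
    return conn.execute(count_sql).fetchone()[0] - before
```

Using the feed's own item id as the primary key is what makes repeated polling idempotent. With a MySQL driver the placeholder style is usually `%s` rather than `?`.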

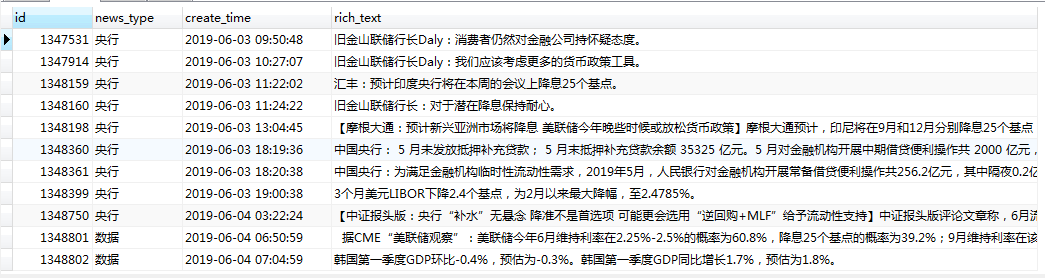

采集到的数据:

10.2、Syncing MySQL data to Elasticsearch

Create a new index in Elasticsearch:

`PUT sina_news`
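For readers who prefer to create the index from a script, here is a minimal sketch of the same `PUT` request. The field names and the shard and replica settings are assumptions chosen for illustration, not the article's actual mapping; the typeless `mappings.properties` layout is the form used by Elasticsearch 7. The helper names `build_index_body` and `create_index` are hypothetical.

```python
import json
import urllib.request


def build_index_body():
    """Return an index definition with hypothetical fields for news items."""
    return {
        "settings": {"number_of_shards": 1, "number_of_replicas": 0},
        "mappings": {
            "properties": {
                "id": {"type": "long"},
                # Default date format accepts ISO 8601 timestamps.
                "create_time": {"type": "date"},
                "content": {"type": "text"},
            }
        },
    }


def create_index(base_url, name, body):
    """Send PUT <base_url>/<name> and return the decoded JSON response."""
    request = urllib.request.Request(
        f"{base_url}/{name}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    # Assumes Elasticsearch is listening on localhost:9200.
    print(create_index("http://localhost:9200", "sina_news", build_index_body()))
```

For Chinese text a dedicated analyzer usually gives better matches than the default one; which analyzer to configure depends on the plugins installed in your cluster.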

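Candidate tools for the sync:

- https://github.com/siddontang/go-mysql-elasticsearch: I found no examples of real production use, and I am not familiar with Go;

- https://www.elastic.co/blog/logstash-jdbc-input-plugin: supports full and incremental sync but not deletes; runs as a scheduled job; write-up: https://www.cnblogs.com/mignet/p/MySQL_ElasticSearch_sync_By_logstash_jdbc.html;

- https://github.com/jprante/elasticsearch-jdbc/tree/master

- https://github.com/m358807551/mysqlsmom: syncs from the binlog; supports full and incremental sync as well as deletes; documentation: https://elasticsearch.cn/article/756;

- https://github.com/mardambey/mypipe: writes to Kafka; you have to consume from Kafka yourself, convert the messages to JSON, and then write them to Elasticsearch;

This is only a demo, and propagating deletes in real time is not a requirement, so I use the official plugin, logstash-input-jdbc: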
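To make the trade-off concrete, the sketch below imitates the polling approach in plain Python: on each run it copies only the rows whose id is greater than the last value seen, which is conceptually what the jdbc input does with its tracked `sql_last_value`. It is not Logstash code. The table layout, the helper names, and the use of `sqlite3` and a dict as stand-ins for MySQL and Elasticsearch are all assumptions for illustration.

```python
import sqlite3


def fetch_new_rows(conn, last_id):
    """Return (id, content) rows with id greater than last_id, oldest first."""
    cursor = conn.execute(
        "SELECT id, content FROM sina_news WHERE id > ? ORDER BY id",
        (last_id,),
    )
    return cursor.fetchall()


def sync_once(conn, index, last_id):
    """Copy new rows into index (a dict standing in for Elasticsearch).

    Returns the highest id copied so far, to be passed to the next run.
    """
    for row_id, content in fetch_new_rows(conn, last_id):
        index[row_id] = {"id": row_id, "content": content}
        last_id = row_id
    return last_id


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sina_news (id INTEGER PRIMARY KEY, content TEXT)")
    conn.executemany("INSERT INTO sina_news VALUES (?, ?)", [(1, "a"), (2, "b")])
    index = {}
    last_id = sync_once(conn, index, 0)        # first run copies everything
    conn.execute("DELETE FROM sina_news WHERE id = 1")
    last_id = sync_once(conn, index, last_id)  # the delete is never seen
    print(sorted(index), last_id)
```

Because each poll only asks for rows newer than the last one, a row deleted in the source simply never shows up again, and the copy in the index stays where it is. Binlog-based tools see the delete event itself, which is why they can propagate it.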

https://github.com/logstash-plugins/logstash-input-jdbc

https://github.com/logstash-plugins/logstash-input-jdbc/releases

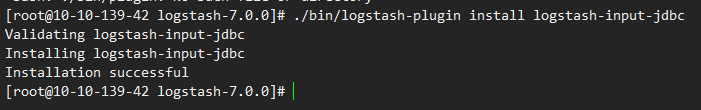

Install the JDBC input plugin for Logstash:

cd /data/carloz/tools/logstash-7.0.0/

./bin/logstash-plugin install logstash-input-jdbc

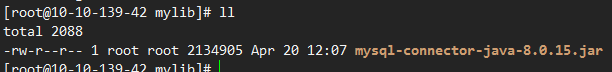

Upload the JDBC driver:

[root@10-10-139-42 logstash-7.0.0]# mkdir -p mylib

[root@10-10-139-42 mylib]# cd /data/carloz/tools/logstash-7.0.0/mylib

Upload mysql-connector-java-8.0.15.jar to this directory.

Create the pipeline configuration file:

[root@10-10-139-42 myconf]# vi sina_news.conf

`input {`

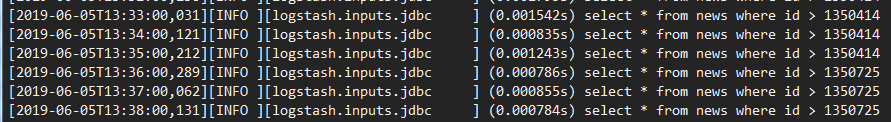

Start the sync:

[root@10-10-139-42 logstash-7.0.0]# ./bin/logstash -f myconf/sina_news.conf &

GET sina_news/_search

At this point the data has been loaded successfully, and the Logstash process keeps polling for new rows.

View the data:

`GET sina_news/_search`

10.3、从Elasticsearch中搜索数据,返回给前端

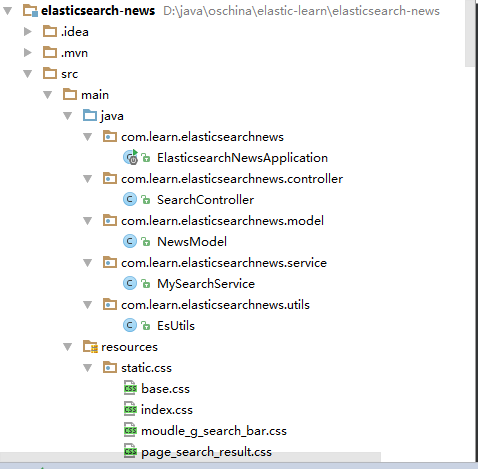

工程结构如图:

The project is built with Spring Boot, with its dependencies declared in pom.xml.

Search entry point:

`/**`

Core search code:

`package com.learn.elasticsearchnews.service;`
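Independently of the Java service, the request it has to send to Elasticsearch can be sketched in a few lines of Python. This is an assumed shape, not the project's actual query: it runs a `match` query on a hypothetical `content` field, asks for highlighted fragments, and flattens the response into a list the front end could render. The helper names `build_search_body` and `extract_hits` are hypothetical.

```python
def build_search_body(keyword, size=20):
    """Build a _search request body for a keyword, with highlighting."""
    return {
        "size": size,
        "query": {"match": {"content": keyword}},
        "highlight": {
            "pre_tags": ["<em>"],
            "post_tags": ["</em>"],
            "fields": {"content": {}},
        },
    }


def extract_hits(response):
    """Flatten a _search response into a list of plain dicts.

    Highlighted fragments are preferred; the stored content is the fallback.
    """
    results = []
    for hit in response.get("hits", {}).get("hits", []):
        source = hit.get("_source", {})
        fragments = hit.get("highlight", {}).get("content")
        results.append(
            {
                "id": hit.get("_id"),
                "content": " ".join(fragments)
                if fragments
                else source.get("content", ""),
                "create_time": source.get("create_time"),
            }
        )
    return results
```

The body returned by `build_search_body` is what would be posted to `sina_news/_search`. Keeping response parsing in a separate function makes it easy to test without a running cluster.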

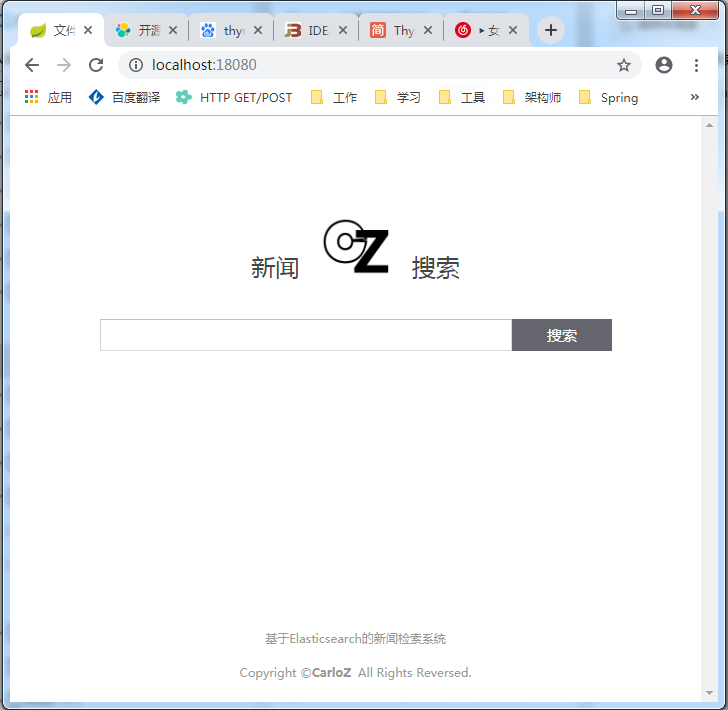

10.4、ES news search in practice: the query page

Open http://localhost:18080/ in a browser:

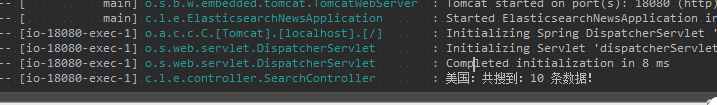

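10.5、ES news search in practice: displaying results

Search for "美国" (United States) in the search box. Because this is only a demo, pagination is not implemented; add it yourself if you need it.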
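If you do add pagination, the simplest route is the `from` and `size` parameters of the search request. The sketch below computes them from a 1-based page number; the function names and the default page size are assumptions for illustration. The guard reflects the default `index.max_result_window` of 10000, beyond which Elasticsearch rejects `from` + `size` requests.

```python
MAX_RESULT_WINDOW = 10000  # Elasticsearch default for index.max_result_window


def page_params(page, page_size=10):
    """Translate a 1-based page number into Elasticsearch from/size values."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    offset = (page - 1) * page_size
    if offset + page_size > MAX_RESULT_WINDOW:
        raise ValueError("requested page is beyond the result window")
    return {"from": offset, "size": page_size}


def total_pages(total_hits, page_size=10):
    """Number of pages needed to show total_hits results (ceiling division)."""
    return -(-total_hits // page_size)
```

Merging `page_params(page)` into the search body is enough for a demo like this one. For very deep result sets, `search_after` is generally a better fit than large `from` offsets.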