1. Searching files with the index

2. Debugging the search results

3. The complete file

----------

Code repository: https://gitee.com/carloz/lucene-learn.git

https://gitee.com/carloz/lucene-learn/tree/master/lucene-filesearch

----------

1. Searching files with the index

    /**

2. Debugging the search results

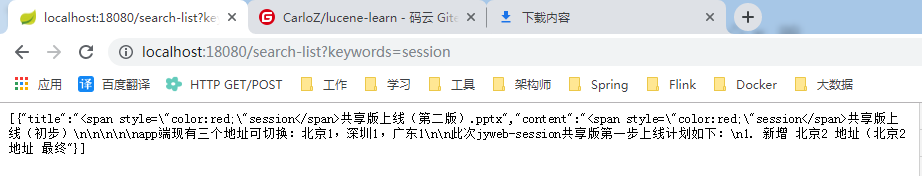

Define a `search-list` endpoint that returns the search data for debugging.

    /**

http://localhost:18080/search-list?keywords=session
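Besides opening the URL in a browser, the endpoint can be called from a small client, which helps when the keywords contain spaces or non-ASCII characters that need URL encoding. The following is a minimal sketch using only the JDK (Java 11+). The class `SearchListDebugClient` and its methods are hypothetical helpers and are not part of the repository. It assumes the application is running locally on port 18080, as in the URL above, and it makes no assumption about the response format: the body is printed as-is.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for calling the search-list debug endpoint; not part of the repository.
class SearchListDebugClient {

    // Base URL taken from the example request in this post.
    static final String BASE_URL = "http://localhost:18080/search-list";

    // Builds the request URI, URL-encoding the keywords so that spaces
    // and non-ASCII terms are transmitted correctly.
    static URI buildUri(String keywords) {
        String encoded = URLEncoder.encode(keywords, StandardCharsets.UTF_8);
        return URI.create(BASE_URL + "?keywords=" + encoded);
    }

    // Sends a GET request and returns the raw response body for inspection.
    static String fetch(URI uri) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri).GET().build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) throws InterruptedException {
        // Use the first command-line argument as keywords, defaulting to "session".
        URI uri = buildUri(args.length > 0 ? args[0] : "session");
        System.out.println("GET " + uri);
        try {
            System.out.println(fetch(uri));
        } catch (IOException e) {
            // The application from this post must be running for the call to succeed.
            System.out.println("Request failed: " + e);
        }
    }
}
```

Run without arguments, it requests the same URL as the example above. Passing a different keyword string as the first argument queries the endpoint with that value instead.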

3. The complete file

    package com.learn.lucenefilesearch.controller;